Oct 2025

Python Neural Network from Scratch

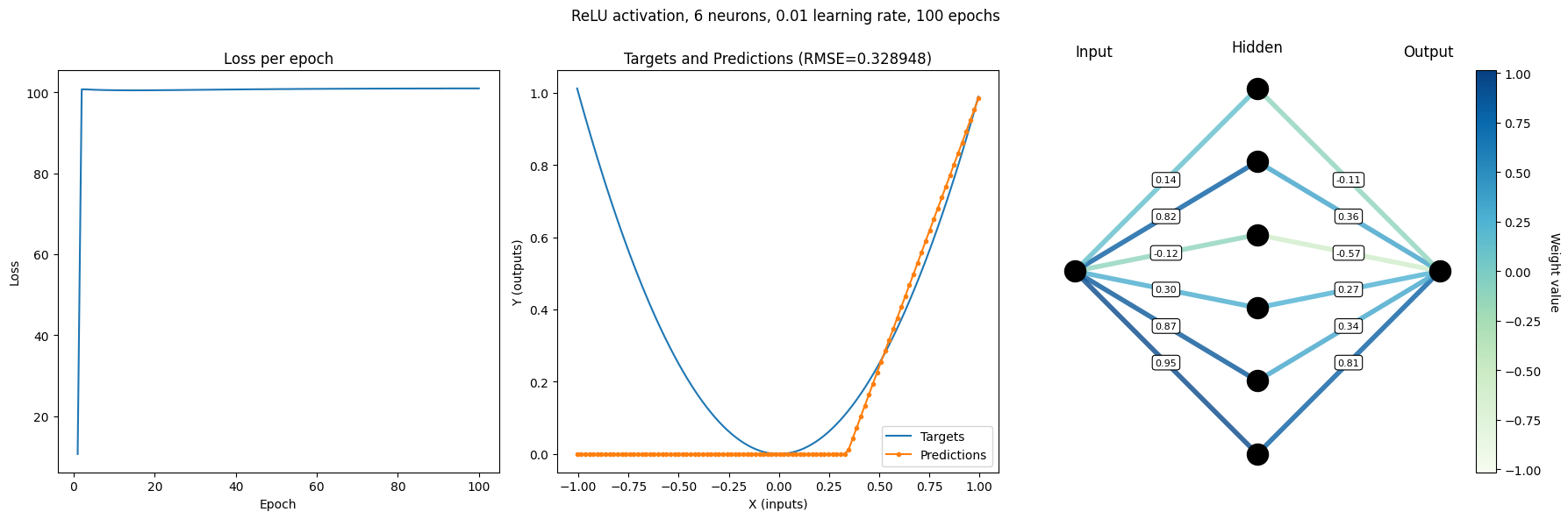

Diagram showing weights between neurons. This simplified view is possible because the neural network has only one input neuron, one hidden layer, and one output neuron. If the network were more complex, it would be impractical to show all the weights in this manner.

The goal of this project was to code a single-hidden-layer neural network (NN) in Python using only NumPy for the network itself (plus matplotlib for plotting and tqdm for progress bars). My motivation was to gain a better grasp of the fundamentals of neural networks, as well as to develop intuition about hyperparameters, neuron counts, and activation functions.

The specific problem that this neural network attempts to solve is approximating the function f(x) = x^2. This is done by an NN with 1 input neuron, 1 output neuron, and some number of hidden neurons.
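
To make the setup concrete, here is a minimal sketch of such a network trained with full-batch gradient descent. It is not the project's actual code: the function name `train_x_squared`, the input range [-1, 1], the tanh activation, the mean-squared-error loss, and every default value (8 hidden neurons, learning rate 0.05, 2000 steps, initialization scale 0.5) are assumptions chosen for illustration.

```python
import numpy as np

def train_x_squared(hidden=8, lr=0.05, steps=2000, seed=0):
    """Train a 1-input, `hidden`-neuron, 1-output tanh network on f(x) = x^2.

    All defaults are illustrative assumptions, not values from the project.
    """
    rng = np.random.default_rng(seed)

    # Training data: 64 evenly spaced points in [-1, 1] (assumed range).
    x = np.linspace(-1.0, 1.0, 64).reshape(-1, 1)   # shape (N, 1)
    y = x ** 2

    # Parameters: input -> hidden (W1, b1) and hidden -> output (W2, b2).
    W1 = rng.normal(0.0, 0.5, size=(1, hidden))
    b1 = np.zeros((1, hidden))
    W2 = rng.normal(0.0, 0.5, size=(hidden, 1))
    b2 = np.zeros((1, 1))

    n = x.shape[0]
    losses = []
    for _ in range(steps):
        # Forward pass.
        z1 = x @ W1 + b1            # hidden pre-activations, shape (N, hidden)
        a1 = np.tanh(z1)            # hidden activations
        y_hat = a1 @ W2 + b2        # linear output, shape (N, 1)

        err = y_hat - y
        losses.append(float(np.mean(err ** 2)))   # mean squared error

        # Backward pass (chain rule applied layer by layer).
        d_yhat = 2.0 * err / n
        dW2 = a1.T @ d_yhat
        db2 = d_yhat.sum(axis=0, keepdims=True)
        d_a1 = d_yhat @ W2.T
        d_z1 = d_a1 * (1.0 - a1 ** 2)             # tanh'(z) = 1 - tanh(z)^2
        dW1 = x.T @ d_z1
        db1 = d_z1.sum(axis=0, keepdims=True)

        # Gradient descent update.
        W1 = W1 - lr * dW1
        b1 = b1 - lr * db1
        W2 = W2 - lr * dW2
        b2 = b2 - lr * db2

    return (W1, b1, W2, b2), losses

params, losses = train_x_squared()
print(f"initial loss: {losses[0]:.4f}, final loss: {losses[-1]:.4f}")
```

The output layer is left linear here so the network can produce any real value; whether the original code does the same is not stated.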

I wrote all of the code for this project myself (without generative AI), with the exception of some matplotlib functions used for visualizing results.

After writing the code for building, training, and evaluating single-hidden-layer neural networks, I conducted numerous experiments by trying different:

- Activation functions.
- Neuron counts.
- Learning rates.
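
One way to organize experiments like these is to pair each activation function with its derivative (which backpropagation needs) and then enumerate every combination of settings. The sketch below is an illustration only: the names `ACTIVATIONS`, `numerical_derivative`, `neuron_counts`, and `learning_rates`, and the specific values in the grid, are hypothetical and not taken from the project.

```python
import itertools
import numpy as np

# Each entry maps a name to (function, derivative). Names are illustrative.
ACTIVATIONS = {
    "tanh": (np.tanh, lambda z: 1.0 - np.tanh(z) ** 2),
    "relu": (lambda z: np.maximum(z, 0.0), lambda z: (z > 0).astype(float)),
    "quadratic": (lambda z: z ** 2, lambda z: 2.0 * z),
}

def numerical_derivative(f, z, eps=1e-6):
    """Central-difference estimate of f'(z), used to sanity-check derivatives."""
    return (f(z + eps) - f(z - eps)) / (2.0 * eps)

# Hypothetical sweep values; the write-up does not list the ones actually used.
neuron_counts = [1, 4, 16]
learning_rates = [0.001, 0.01, 0.1]

# One (activation, neuron count, learning rate) tuple per experiment.
grid = list(itertools.product(ACTIVATIONS, neuron_counts, learning_rates))

# Check each hand-written derivative against the numerical estimate.
# The test points avoid z = 0, where ReLU is not differentiable.
z = np.array([-1.5, -0.3, 0.4, 2.0])
for name, (f, df) in ACTIVATIONS.items():
    max_err = np.max(np.abs(df(z) - numerical_derivative(f, z)))
    print(f"{name}: max derivative error {max_err:.2e}")
```

Checking hand-written derivatives against a finite-difference estimate is a cheap way to catch backpropagation bugs before running a full sweep.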

In doing so, I discovered some intriguing nuances and phenomena:

- Different activation functions often have different optimal learning rates.
- Single-layer neural networks are limited in ways that multi-layer ones aren’t.
- Some activation functions are better at taking advantage of additional width (more neurons) than others.
- Depending on the situation (function approximation, classification, etc.), certain activation functions can perform much better than others.
  - A hyperbolic tangent (tanh) activation function performs relatively well.
  - A rectified linear (ReLU) activation function performs extremely poorly.
  - A quadratic activation function performs perfectly since the quadratic activation function is approximating itself.
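
The quadratic result can be checked by hand: with a single hidden neuron and activation g(z) = z^2, the network computes w2 * (w1 * x + b1)^2 + b2, which equals x^2 exactly when w1 = 1, b1 = 0, w2 = 1, b2 = 0. The sketch below demonstrates this with hand-set weights; the function name `quadratic_net` and its parameter names are hypothetical, and no training is involved.

```python
import numpy as np

def quadratic_net(x, w1=1.0, b1=0.0, w2=1.0, b2=0.0):
    """One-hidden-neuron network with quadratic activation g(z) = z^2."""
    hidden = (w1 * x + b1) ** 2   # hidden neuron output
    return w2 * hidden + b2       # linear output neuron

x = np.linspace(-3.0, 3.0, 13)
max_abs_error = np.max(np.abs(quadratic_net(x) - x ** 2))
print("max |network(x) - x^2| =", max_abs_error)
```

The solution is not unique: for example, w1 = 2 with w2 = 0.25 represents the same function, since 0.25 * (2x)^2 = x^2.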

Writing the code for this project (by hand) and running the experiments above was eye-opening. By exploring the fundamental aspects of neural networks (especially backpropagation), I now have a much clearer understanding of the inner workings and complexity of neural networks, as well as the reasons why they work so well in some situations but fail outright in others.